Optimization in Swift, part 4

In Part 3 I wrote about trying to optimize my filter code by using an unsafe API from the standard library. This helped a tiny bit but barely improved the performance of my initial fictional use case (all about the test setup you can find out in Part 1).

Updating the Memory Buffer Concurrently

Looking at the results of both code variants so far, I got an idea — I could fuse together using concurrency to do the collection filtering and using a buffer in memory so I can update the array storage directly.

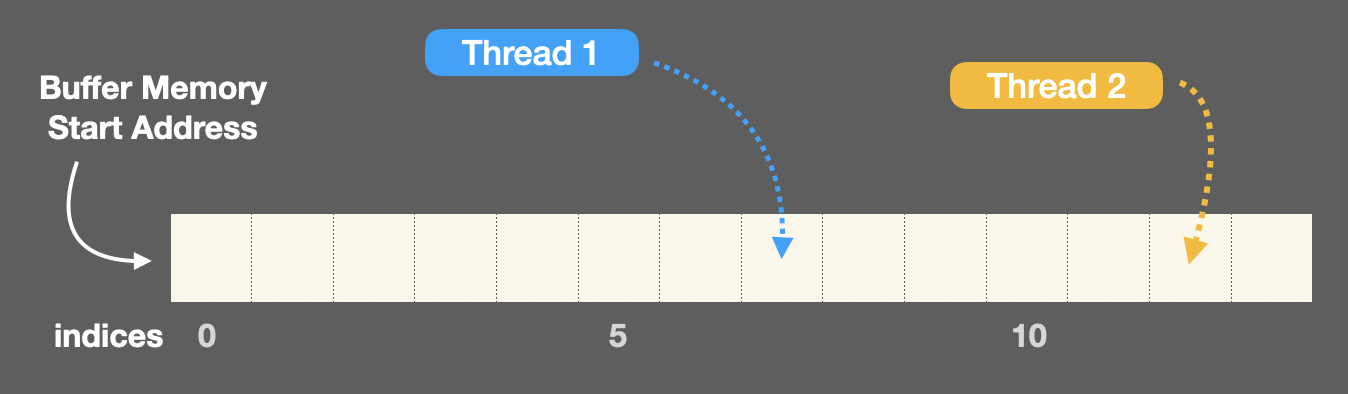

The interesting thing about writing to memory directly (instead of using the array APIs) is that I can concurrently write to different offsets without causing memory corruption.

In other words — if I know the offset of the array element I want to update, I can update that specific element without preventing other threads to write other elements in the same buffer at the same time:

In yet other words — I could filter the source collection concurrently and build the resulting array also concurrently.

Let’s see how would that look in code!

I’ll start, just like in the previous post, by initializing a new array with a buffer the size of the source collection:

|

|

There’s a problem though — Array.init(unsafeUniti...) takes a synchronous closure so I can’t use async/await to concurrently filter the elements…

Well, good news — Grand Central Dispatch is still alive and kicking and it has no idea that Swift has now asynchronous context. I’ll use DispatchQueue.concurrentPerform(iterations:execute:) to spread the work across multiple threads from inside the synchronous array initializer’s closure.

The plan to update concurrently the buffer is simple — I’ll write all passing elements to the result, and for all elements that should be filtered I’ll write a nil instead. Finally, I’ll compact the resulting array before returning it.

Here’s how the completed code looks like:

|

|

There is a lot to unpack in this code but, long story short, essentially each thread performs the filtering of one element at a time and updates the resulting collection offset with either nil or the source element. Finally, a simple compactMap { $0 } removes all nil elements from the result.

Exciting! Let’s see the results in Attabench.

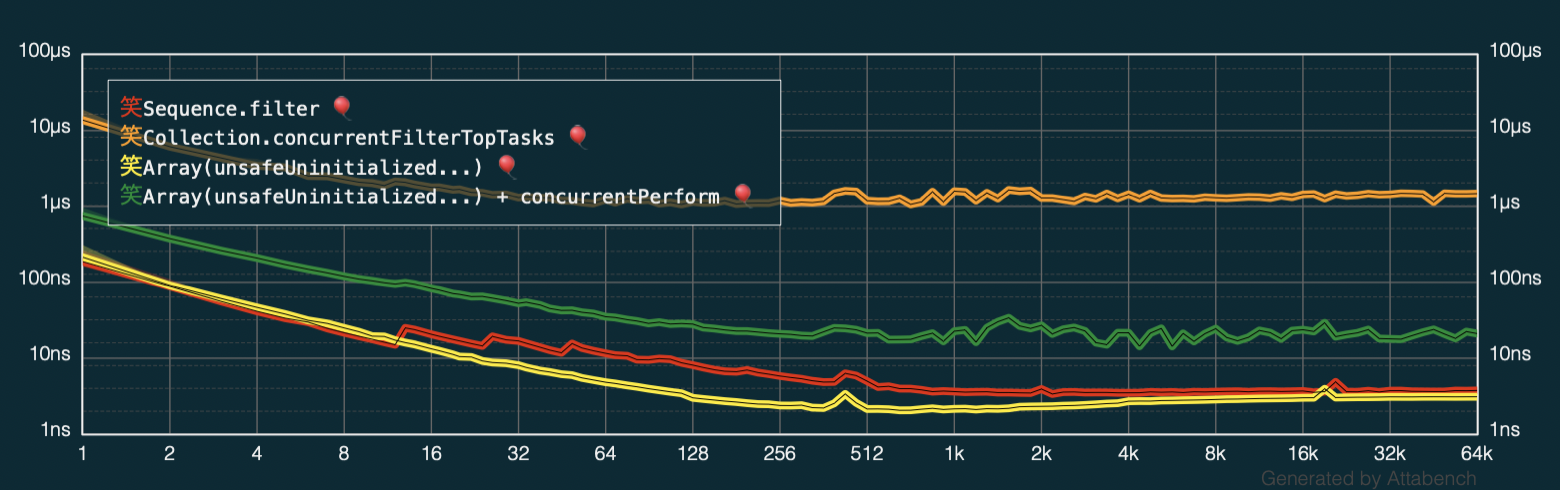

If you paid attention to the charts in the previous posts you’ve already likely guessed that DispatchQueue.concurrentPerform(...) (in green) didn’t improve the speed of filtering when using filterLight as the condition:

While performing better than the code variant using async/await (in orange), my latest code iteration is still much slower than Array.filter (red).

This result re-iterates what I mentioned in the first part of the series — optimize only when and where it makes sense… For the simple condition num % 6 == 0 neither adding concurrency nor using unsafe APIs would improve the duration metric.

But let’s see about the performance when filtering with filterHeavy — the function that does some SHA checksums before deciding which elements should be filtered.

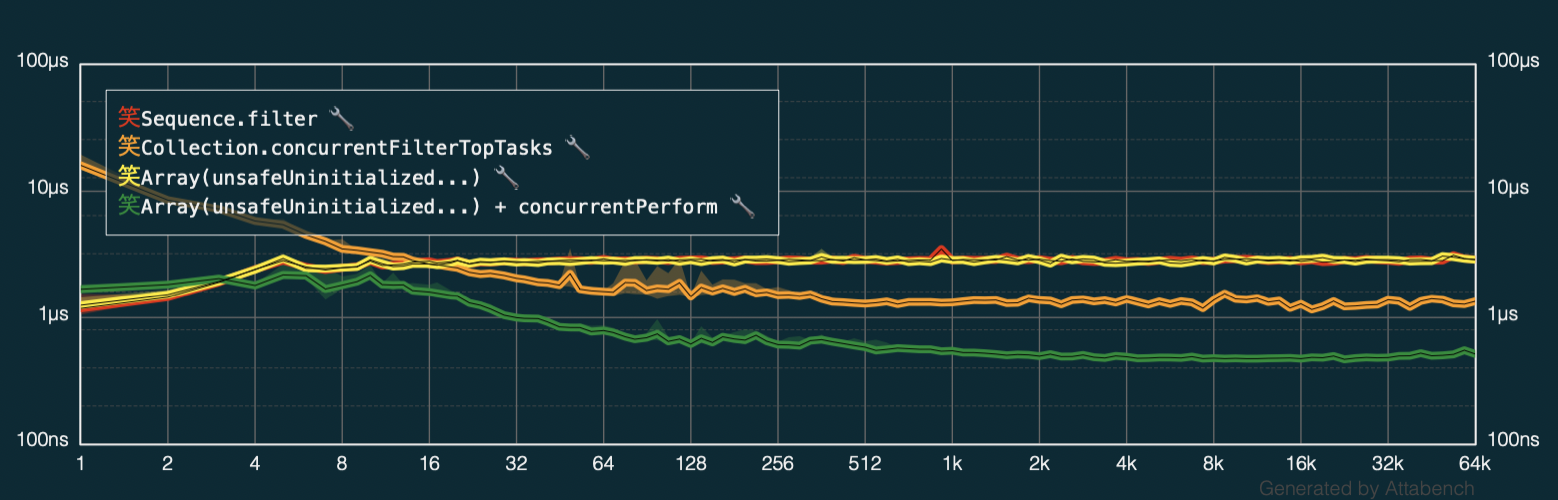

Plotting the latest code (in green) with filterHeavy against the previous variants looks like this:

The concurrent code that also uses an unsafe memory buffer is considerably faster than all earlier iterations!

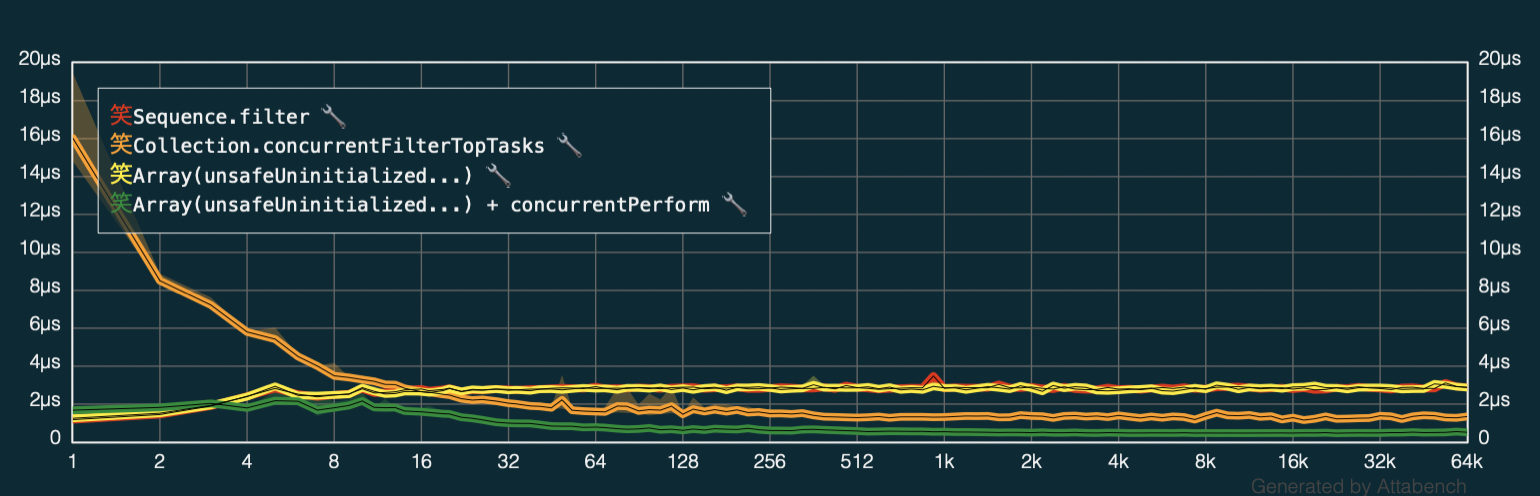

That’s pretty nice so let’s turn off the logarithmic scaling to see if I hit my (somewhat arbitrary) goal of slashing the duration in half:

The results look great — it looks like for larger collections the initial time spend per element is around 3µs and my final code needs around 0.5µs which is much better than my initial goal.

Conclusion

I think I’m going to wrap the series at this point. Before doing that, let me reiterate my sentiment from part 1 — every time you’ll have different constraints, that’s one of the reasons it’s so hard to optimize-in-advance.

The solution above uses a lot of CPU in order to reduce the duration and likely much more RAM than a simple Array.filter. Since my goal was to reduce the duration, all other metrics don’t bother me but in your case — always measure and find what works best.

I hope this mini-series showcased a little bit of my thought process and that was interesting to read about. If you want to discuss this code or have an even faster iteration to share — hit me up on Twitter at https://twitter.com/icanzilb !

Where to go from here?

If you’d like to support me, get my book on Swift Concurrency:

Interested in discussing the new async/await Swift syntax and concurrency? Hit me up on twitter at https://twitter.com/icanzilb.