Actors, the cooperative pool and concurrency

After I started benchmarking how different APIs perform when used to build a simple counter, I got really interested in how the new Swift concurrency model behaves at runtime.

So in this post, I’ll use a couple of actors, have them do some concurrent computations, and check what the thread list and dispatch queues look like in the debugger.

The Test Setup

I’ve prepared a super-duper simple SwiftUI app that does a bunch of floating-point multiplication and division.

In this post, I’m using Xcode 13.0 (13A233), running the test project in an iOS Simulator on my 2018 MacBook Pro. Keep in mind that I’m running everything in debug mode. I’m not sure whether the cooperative pool behaves differently in release builds, but that’s something to dig into in another post.

A Crunch Actor

I have a simple actor called CrunchActor1 that includes a single method that does some number crunching:
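A minimal sketch of such an actor follows. The arithmetic and the default iteration count are my assumptions, not the original code; all crunch() needs to do is keep a thread busy long enough to pause the app in the debugger. Returning the result is also an addition, so callers have something to check.

```swift
// A sketch of CrunchActor1. The loop body and the default iteration count
// are assumptions, chosen only to keep a thread busy for a little while.
actor CrunchActor1 {
    func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            // One multiplication and one division per step. Each step scales
            // the value by x / (x + 0.5), so it never exceeds 1 or reaches 0.
            value *= x
            value /= x + 0.5
        }
        return value
    }
}
```

If the call finishes before you manage to pause in the debugger, raising the iteration count is the easiest knob.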

The point of this is simply to have an actor that keeps a thread busy for a little while, so I can break in the debugger and look at what GCD is doing at runtime.

Running a single actor method

On my first try, I’m running a single call to crunch() in a SwiftUI task:
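Outside of SwiftUI, roughly the same single call can be sketched with an unstructured Task standing in for the .task modifier. This is a command-line approximation under my own assumptions rather than the app's code: runSingleCrunch() is a hypothetical helper, and the actor (with its assumed workload) is repeated in compact form so the snippet compiles on its own.

```swift
actor CrunchActor1 {
    // Assumed workload: floating-point busywork to keep a thread occupied.
    func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper, roughly mirroring `.task { await crunchActor1.crunch() }`.
func runSingleCrunch() async -> Double {
    let crunchActor1 = CrunchActor1()
    // One unstructured task; crunch() itself runs on the actor, whose jobs
    // are executed on the cooperative pool.
    let task = Task { await crunchActor1.crunch() }
    return await task.value
}
```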

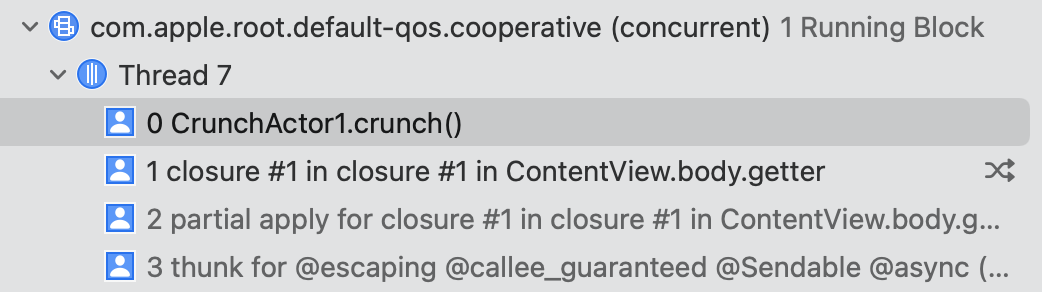

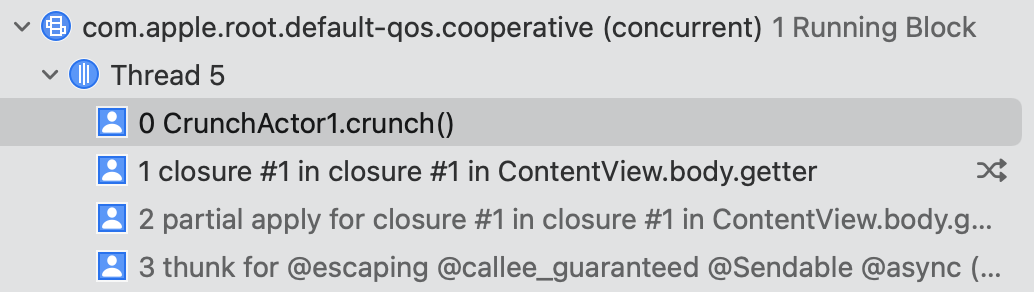

When I break inside crunch(), I get to learn a few interesting facts:

- The cooperative thread pool mentioned in the WWDC videos is a concurrent dispatch queue called com.apple.root.default-qos.cooperative.
- The pool queue has the default quality of service.
- The concurrent queue is currently running one code block, in CrunchActor1.crunch(), on a single thread.

If you are familiar with GCD this really starts to paint a picture.

Also, I’ve heard speculation that an actor is just a serial dispatch queue with compile-time guarantees, but the debugger seems to disagree: technically, there’s a single GCD queue here, and that’s the cooperative pool.

Running actor methods concurrently

Next, to quickly confirm that the actor behaves as expected, I’m trying two calls to crunch() in parallel:
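To check the serialization without a debugger, here’s a sketch that counts how many crunch() calls are inside the actor at the same time. The callsInFlight bookkeeping and the crunchTwiceOnOneActor() helper are hypothetical additions for illustration only; the workload is the same assumed loop.

```swift
actor CrunchActor1 {
    // Bookkeeping for this sketch only: how many crunch() calls are inside
    // the actor right now, and the highest number ever observed.
    private var callsInFlight = 0
    private(set) var maxCallsInFlight = 0

    func crunch(iterations: Int = 10_000_000) -> Double {
        callsInFlight += 1
        maxCallsInFlight = max(maxCallsInFlight, callsInFlight)
        defer { callsInFlight -= 1 }

        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper: two tasks call the same actor instance in parallel.
func crunchTwiceOnOneActor() async -> (results: [Double], maxCallsInFlight: Int) {
    let crunchActor1 = CrunchActor1()
    let first = Task { await crunchActor1.crunch() }
    let second = Task { await crunchActor1.crunch() }
    let a = await first.value
    let b = await second.value
    let peak = await crunchActor1.maxCallsInFlight
    return ([a, b], peak)
}
```

Since crunch() never suspends, the actor finishes one call before it starts the next, so maxCallsInFlight should come back as 1.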

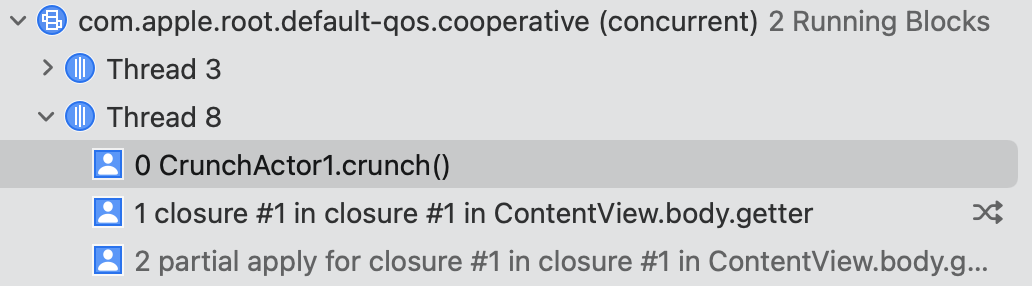

This code launches two tasks in parallel and each of them calls crunch(). The debugger shows exactly the same layout as before:

The actor transparently serializes the calls to crunch(), so the cooperative pool still runs this code on a single thread.

Running multiple actors in parallel

So, what does the cooperative pool look like if I run two different actors in parallel? For this example, I’ll use two actors, crunchActor1 and crunchActor2:
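A sketch of the two-actor variant, assuming crunchActor2 is simply a second instance of the same actor type (the post doesn’t show whether it’s a separate type). crunchOnTwoActors() is a hypothetical helper, and the workload is the same assumed loop.

```swift
actor CrunchActor1 {
    // Assumed workload: floating-point busywork to keep a thread occupied.
    func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper: two actor instances, one task each. Nothing forces
// these calls to wait for each other, so the pool may run them on two
// threads at the same time.
func crunchOnTwoActors() async -> [Double] {
    let crunchActor1 = CrunchActor1()
    let crunchActor2 = CrunchActor1()  // assumption: same type, second instance
    let first = Task { await crunchActor1.crunch() }
    let second = Task { await crunchActor2.crunch() }
    let a = await first.value
    let b = await second.value
    return [a, b]
}
```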

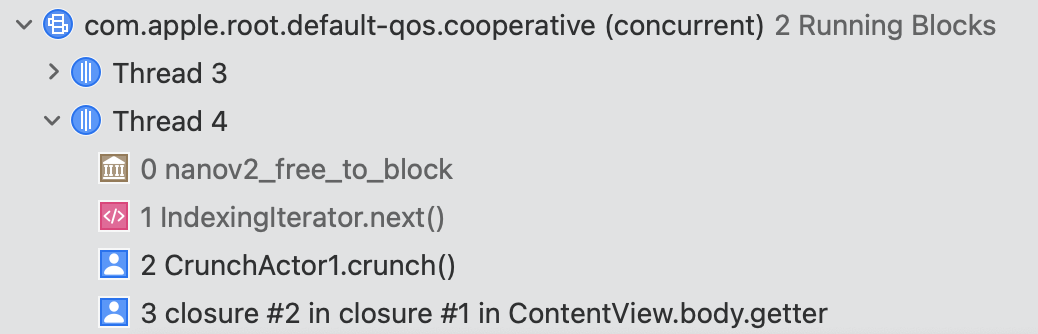

When I break in the debugger now, I see that the pool is using two threads to run the calls to my two actors:

The concurrent queue is now running two blocks simultaneously on two threads, with one actor instance crunching on each thread.

Next is where things get a bit weird 🎃.

Running nonisolated actor code

So, I’m exploring ways to design highly concurrent code. On my first try, I simply annotated crunch() with nonisolated, which removes the actor’s state isolation for that method.
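Sketched in code, the change is a single keyword. The body is the same assumed workload as before; nonisolated is allowed here because crunch() doesn’t read or write any actor state.

```swift
actor CrunchActor1 {
    // nonisolated: crunch() touches no actor state, so it opts out of
    // isolation. Callers no longer hop onto the actor, and concurrent calls
    // are no longer serialized.
    nonisolated func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}
```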

Xcode instantly nudges me to remove the await keywords, so I do, and now I’m calling crunch() synchronously:
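A sketch of the four-task version, assuming the tasks are started from a nonisolated async function (the hypothetical crunchFourTimes()) so they don’t inherit the main actor. There’s no await on the crunch() calls themselves; the only awaits are for the task results.

```swift
actor CrunchActor1 {
    // Assumed workload, nonisolated so it can be called synchronously.
    nonisolated func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper: four tasks call the nonisolated method directly.
func crunchFourTimes() async -> [Double] {
    let crunchActor1 = CrunchActor1()
    var tasks: [Task<Double, Never>] = []
    for _ in 0..<4 {
        // Created from a nonisolated context, so these tasks run on the
        // cooperative pool rather than on the main actor.
        tasks.append(Task { crunchActor1.crunch() })
    }
    var results: [Double] = []
    for task in tasks {
        results.append(await task.value)
    }
    return results
}
```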

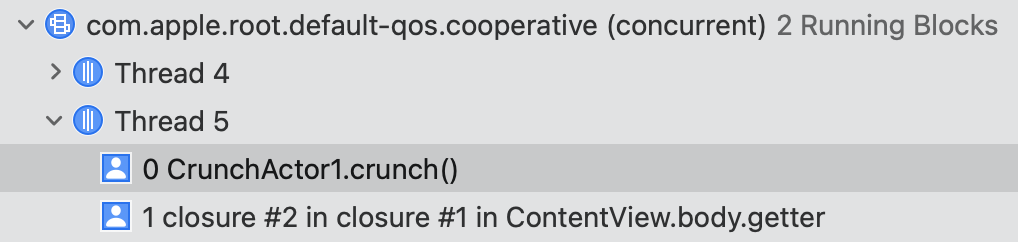

Running the debugger, I see that, yet again, only two of my four tasks run concurrently:

So this time the calls to crunch() run freely, but the cooperative pool gives me only two threads at a time to run my four concurrent tasks.

Unfortunately, I couldn’t find anything in the docs about asking for more threads, so it seems I can only “spread” my work across two CPU cores 🤔. I’ve also had feedback that how many cores the pool can spare depends on the machine’s hardware.

Falling back on classes

So I’m trying to figure out whether actors somehow still serialize or limit the execution of methods, even when they’re nonisolated. I’m going to ditch actors, fall back on classes, and check how many threads I get in that case:
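A sketch of the class-based variant. Cruncher and crunchFourTimesWithClass() are hypothetical names, since the class isn’t shown, and the workload is the same assumed loop. Because the class has no stored state, it can be marked final and Sendable and shared between tasks.

```swift
// Hypothetical class standing in for the actor; with no stored state it can
// be declared Sendable and captured by several tasks.
final class Cruncher: Sendable {
    func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper: the same four-task experiment, minus the actor.
func crunchFourTimesWithClass() async -> [Double] {
    let cruncher = Cruncher()
    var tasks: [Task<Double, Never>] = []
    for _ in 0..<4 {
        tasks.append(Task { cruncher.crunch() })
    }
    var results: [Double] = []
    for task in tasks {
        results.append(await task.value)
    }
    return results
}
```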

Yet again, the cooperative pool runs only two blocks at a time on two separate threads:

So it seems that the cooperative pool limits how much concurrency you have access to (to spare you some reading, I’m skipping the TaskGroup version, which produces the same results).
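For reference, a TaskGroup variant could look roughly like this. Cruncher and crunchWithTaskGroup(taskCount:) are hypothetical names, and the workload is the same assumed loop; the group’s child tasks are scheduled on the same cooperative pool as unstructured tasks.

```swift
// Hypothetical stateless class, so it can be shared by child tasks.
final class Cruncher: Sendable {
    func crunch(iterations: Int = 10_000_000) -> Double {
        var value = 1.0
        for i in 0..<iterations {
            let x = Double(i + 1)
            value = value * x / (x + 0.5)
        }
        return value
    }
}

// Hypothetical helper: one child task per crunch, results collected as
// they complete (so the order is not guaranteed).
func crunchWithTaskGroup(taskCount: Int = 4) async -> [Double] {
    let cruncher = Cruncher()
    return await withTaskGroup(of: Double.self, returning: [Double].self) { group in
        for _ in 0..<taskCount {
            group.addTask { cruncher.crunch() }
        }
        var results: [Double] = []
        for await value in group {
            results.append(value)
        }
        return results
    }
}
```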

To a point, I think these results make sense: with this black-box limit, you can’t drain the system’s resources and make other apps unresponsive…

Falling back on DispatchQueue.concurrentPerform(…)

But what if you really, really want to do some heavy concurrent work? So far, the only solution I’ve found is to remove my Task code altogether and fall back on DispatchQueue.concurrentPerform(...):
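A sketch of the concurrentPerform(...) approach, assuming the same floating-point loop as a free function; crunch(iterations:) and crunchWithConcurrentPerform(count:) are hypothetical names. DispatchQueue.concurrentPerform(iterations:execute:) blocks the calling thread until every iteration is done, so in an app you’d probably want to call it off the main thread.

```swift
import Dispatch

// Assumed workload: the same floating-point loop as a free function.
func crunch(iterations: Int = 10_000_000) -> Double {
    var value = 1.0
    for i in 0..<iterations {
        let x = Double(i + 1)
        value = value * x / (x + 0.5)  // one multiplication, one division
    }
    return value
}

// Hypothetical helper: runs `count` crunches in parallel via GCD and
// returns only after every iteration has finished.
func crunchWithConcurrentPerform(count: Int = 4) -> [Double] {
    var results = [Double](repeating: 0, count: count)
    results.withUnsafeMutableBufferPointer { buffer in
        let slots = buffer  // a let copy; its subscript setter is nonmutating
        DispatchQueue.concurrentPerform(iterations: count) { index in
            // Each iteration writes only its own slot, so writes never overlap.
            slots[index] = crunch()
        }
    }
    return results
}
```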

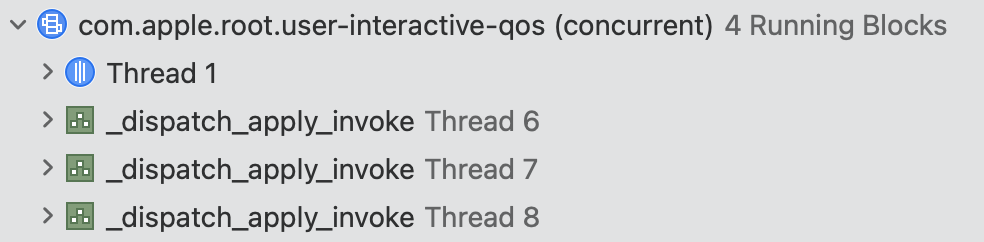

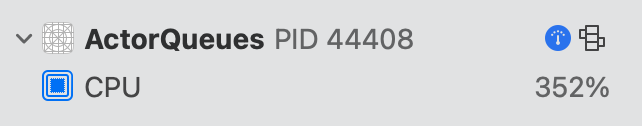

Just like before iOS 15, this code fans the work out across multiple threads 🔥 and runs your parallel code unhindered:

Note that this is not the cooperative pool anymore; it’s a regular concurrent GCD queue used by concurrentPerform(). It runs code on four threads in parallel and uses four CPU cores quite neatly:

Results

So it seems that the pool automatically allots threads to async/await code and actors. For most apps, this should be perfect: the new concurrency model will only get better with time, so sticking with the defaults is the best way forward.

For apps where you need to make the most of the machine, it looks like the current solution is DispatchQueue.concurrentPerform(...) or your own Operation- or Thread-based code.

What do you think?

Where to go from here?

Interested in the new async/await Swift syntax? Hit me up on Twitter at https://twitter.com/icanzilb.